Facebook relies on a combination of artificial intelligence and human judgment to remove posts deemed offensive, violent, or otherwise unacceptable under its community standards, but precisely how the ultimate call to take down posts, pages, and groups is made remains unknown.

And Facebook takedowns, no matter the improvements to the process the social media behemoth claims to make, have been no less controversial or questionable — and those whose posts are censored have little if any recourse to argue their case.

Recent head-scratchers that led to an international uproar include Facebook’s removal of the iconic Vietnam War photograph of Phan Thị Kim Phúc, who, at just 9 years old, was captured on film by an Associated Press photographer as she fled the aftermath of an errant napalm attack near a Buddhist pagoda in the village of Trang Bang.

That photograph helped cement in the collective American mind the horrors of the war, and ultimately fueled the success of the anti-war effort. Yet Facebook arbitrarily pulled the image for nudity, and even went on to ban the page of Norway’s Conservative prime minister for posting it as well.

Ultimately, the social media company reversed course in that case, but not before also taking down the equally iconic image of civil rights leader Rosa Parks’s arrest.

But the takedown of the Kim Phúc image might not have been simply an AI error, since the photo had been used as a specific example in training the teams responsible for content removal, two unnamed former Facebook employees told Reuters.

“Trainers told content-monitoring staffers that the photo violated Facebook policy, despite its historical significance, because it depicted a naked child, in distress, photographed without her consent, the employees told Reuters.”

In the final decision to reverse that censorship, Justin Osofsky, head of Facebook’s community operations division, admitted the removal had been a “mistake.”

According to Reuters, to which many current and former Facebook employees spoke on condition of anonymity, the process of judging which posts deserve to be removed and which should be allowed is, in certain instances, left to the discretion of a small cadre of the company’s elite executives.

In addition to Osofsky, Global Policy Chief Monika Bickert; government relations chief, Joel Kaplan; vice president for public policy and communications, Elliot Schrage; and Facebook Chief Operating Officer Sheryl Sandberg make the final call on censorship and appeals.

“All five studied at Harvard, and four of them have both undergraduate and graduate degrees from the elite institution. All but Sandberg hold law degrees. Three of the executives have longstanding personal ties to Sandberg,” the outlet notes. Chief Executive Mark Zuckerberg also occasionally offers guidance in difficult decisions. But there are others.

Company spokeswoman Christine Chen explained, “Facebook has a broad, diverse and global network involved in content policy and enforcement, with different managers and senior executives being pulled in depending on the region and the issue at hand.”

For those on the receiving end of what could only be described as lopsided and inexplicable censorship, recourse is generally limited and can be nearly impossible to come by. Often, the nature of posts and pages removed insinuates political motivations on the part of the censors.

Indeed, once again igniting international controversy, Facebook disabled, among others, the accounts of editors at Quds and Shehab News Agency, both prominent Palestinian media organizations, without explanation or even a specific example offered as justification.

Although three of four Palestinian-focused accounts were restored, Facebook declined to explain to either Reuters or the accounts’ owners why the decision was reversed, except to say it had been an ‘error.’

In fact, although Chen and other Facebook insiders spoke with Reuters directly about contentious content removal policies and procedures, many details of the processes remain hidden and sorely opaque to the public, which is so often forced to cope with the consequences.

Earlier this year, an exposé by Gizmodo showing Facebook’s suppression of conservative outlets via its “Trending Topics” section appeared to evidence extreme bias in favor of liberal and corporate media mainstays. Alternative media, too, which provides reports counter to the mainstream political and foreign policy paradigm, has often been the subject of controversial take-downs, censorship, and suppressive tactics — either directly by Facebook, or through convoluted algorithms and artificial intelligence bots.

However, considering that Sheryl Sandberg and her loyalists populate the top-level group deciding the fate of content removal complaints, it would appear Wikileaks could provide answers for both post censorship and the suppression of outlets not vowing complete fealty to the preferred, left-leaning narrative.

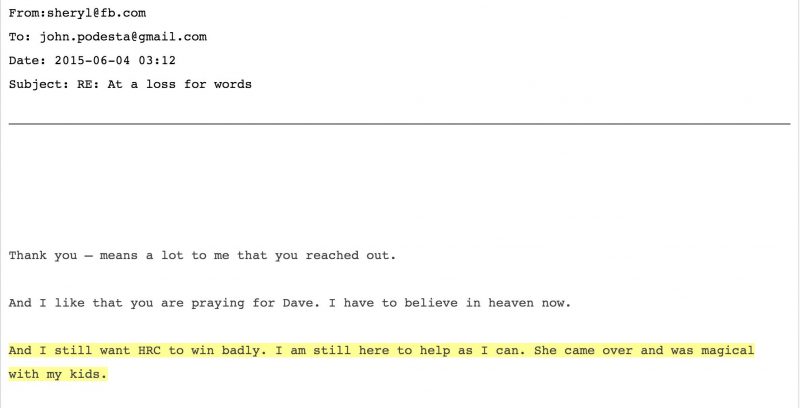

A June 4, 2015, email to Clinton campaign chair John Podesta (an enormous cache of whose emails is still being published on a daily basis by Wikileaks), penned by Sandberg in response to condolences on the death of her husband, states, in part, “And I still want HRC to win badly. I am still here to help as I can. She came over and was magical with my kids.”

After a wave of post removals and temporary page bans, it appears Facebook has begun to come to its senses about what actually violates community standards, and about what might have political worth contrary to the views of its executives.

Senior members of Facebook’s policy team recently posted about the relaxing of rules governing community standards, which, though welcome, might only provide temporary relief. Quoted by the Wall Street Journal, they wrote:

“In the weeks ahead, we’re going to begin allowing more items that people find newsworthy, significant, or important to the public interest—even if they might otherwise violate our standards.”

While the social media giant deems itself a technology platform, not a news platform, Facebook is still the jumping-off point for issues of interest for an overwhelming percentage of its users. Although it perhaps has some responsibility in regard to the removal of certain content, putting censorship in the hands of only a few individuals in certain instances is a chilling reminder of the fragility, and grave importance, of free speech.

This article is free and open source, originally published at The Free Thought Project.